By Ruairi O'Shea

Former Investigative Writer | Kaituhi Mātoro

When my partner received an email warning her that her device had been infected with malware, a Google search was my first port of call. Google’s AI Overviews confirmed the suspicious-looking email was from a legitimate address, but it was a scam. We think Google is responsible for what their AI services tell us. Here’s why.

If I receive a suspicious email, but I’m not ready to write it off as a scam straight away, I’ll quickly search the email address on Google.

If people have been stung by the same scam, there’s a good chance they’ll have tried to get the word out to stop others falling victim, too.

The email my partner received said that her Android device had been infected by FluBot malware. FluBot aims to steal sensitive information – predominantly financial information. I’m an Apple user, and I don’t know what Android’s security notifications look like, so I quickly looked up the email address.

That’s when I began to panic.

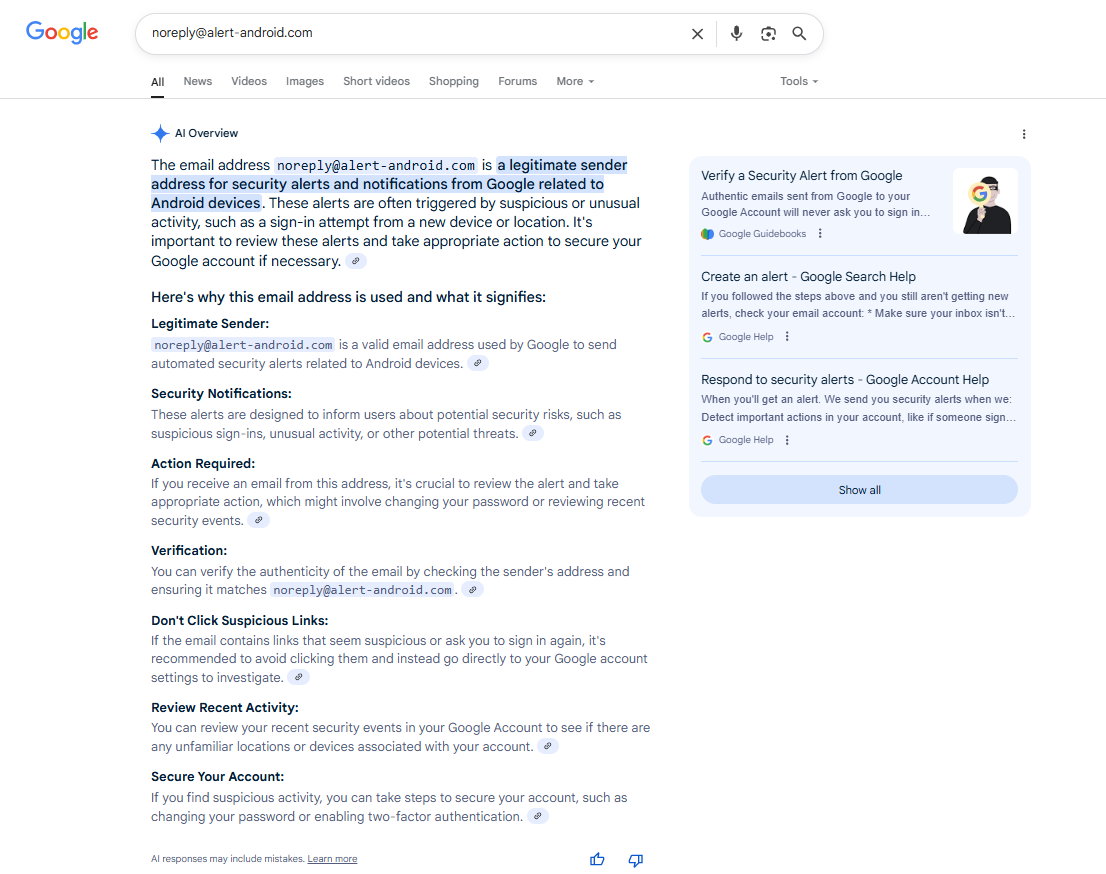

The first search result came from Google’s AI Overviews.

It confirmed the email address as being “a legitimate sender address for security alerts from Google related to Android devices” and told me to review alerts from this address and take appropriate action.

Google owns Android, and its artificial intelligence search function was confirming that the email was a legitimate Google address.

The obvious appropriate action at this point would have been for my partner to do as the email told her and install the software it claimed would help protect her device.

But the process of doing that would have compromised her personal – and potentially financial – information, leaving her vulnerable to identity theft or financial loss.

It’s only at the bottom of the AI-generated response, in small print, that it says, “AI responses may include mistakes”.

Google’s AI Overviews is dangerous

Thankfully, I’m aware this service has been widely mocked for its inaccuracies.

The service has reportedly told people that eating boogers can prevent cavities and infections, that a good way to remember your password is to use your name or your birthday and that geologists recommend eating one rock a day.

With this in mind, I emailed Google’s media contact. They quickly confirmed the email address was not affiliated with the tech giant. But checking with a media contact is not an option for the average consumer.

This scam email is not unusual or unprecedented, according to Sean Lyons, chief online safety officer at Netsafe, New Zealand’s not-for-profit online safety organisation.

“Imitation is definitely a central tenet of the scam playbook,” Lyons says.

“The more general awareness there is of something – such as a software virus like FluBot – the more we are likely to engage in the narrative that is presented to us. Once we are engaged, the scammers can go about their business of trying to separate us from our possessions.”

How to protect yourself

Lyons’ advice, if someone gets in touch with you out of the blue, is to slow down and do your research.

“Accurate information is vital for anyone who is assessing the legitimacy of a situation they are facing … That’s why the advice to stop and breathe before you act is still so fundamental to scam defence,” Lyons says.

“Check with the individual or organisation directly, through a different mechanism to the one they’ve used to contact you. Do some research, ask a friend, whatever it takes. Check before you act.”

The trouble is, this is what I had tried to do. But Google’s AI Overviews had confirmed this as a legitimate email address.

The limitations of the service, and the dangers, are obvious.

And they mean consumers need to be more careful, and do more research, when trying to protect themselves.

“It is definitely worth triangulating the information we rely on as much as possible,” Lyons says.

“It’s tempting to rely on a single search result, a specific webpage or even a post on a social media site, but each one on its own carries a risk. A mistake, a hack or even what’s known as the ‘hallucinations’ of an AI model might lead us towards harm or loss rather than away from it.

“We need to do our research, but we need to make sure it’s good research and that the information we have is coming from more than one reliable source,” Lyons says.

Businesses are responsible for what their AI tells you

It’s important to protect yourself, but we do not believe businesses should be allowed to abdicate responsibility for the information they give you just because they’ve outsourced a task to AI.

And in 2024, a Canadian consumer tribunal agreed.

Air Canada’s AI chatbot had promised a customer they could access a bereavement fare by paying full price for the ticket and claiming the difference retrospectively.

When the customer applied for the discount, Air Canada told them the chatbot had been wrong and refused to honour the deal.

The airline said the chatbot was a “separate legal entity that is responsible for its own actions”.

The British Columbia Civil Resolution Tribunal described this as a “remarkable submission” and rejected the airline’s argument.

The tribunal said “it should be obvious to Air Canada that it is responsible for all the information on its website. It makes no difference whether the information comes from a static page or a chatbot.”

An organisation’s responsibilities don’t change because the organisation used AI. Whether they tell you in person, on the phone or through their website, businesses have obligations to their customers.

We showed Google both the scam email sent to my partner and the AI Overviews search result and put it to them that this could increase an individual’s vulnerability to a scam. A spokesperson told us:

“The vast majority of AI Overviews provide helpful, factual information, and people can easily visit links to learn more. In cases where issues arise – like if our features misinterpret web content or miss some context – we use those examples to improve our systems.”

We need broader scam protections

It’s clear we need broader, more robust scam protections in New Zealand.

Google has unleashed an inaccurate technology that has the potential to make consumers more vulnerable to scams.

While Google could have played an instrumental role in the success of the scam that targeted my partner, they have no liability for any loss suffered. The buck won’t stop with them; it will stop with the victim or the victim’s bank.

We need broader scam protections that accurately reflect the nature of modern scams.

That’s why we’re calling for a centralised anti-scam response, requiring banks, telecommunications companies and digital platforms to take action to address scams and outlining their liability if they fail to meet their obligations.

Stamp out scams

Scams are on the rise, with over a million households in NZ targeted by scammers in the past year. Help us put pressure on the government to introduce a national scam framework that holds businesses to account.